Newsrooms across Asia and Europe have been progressively integrating AI into their workflows over the past few years. With the introduction of generative AI, they are taking the next steps.

At this year’s ABU-Rai Days, news leaders shared their latest insights and tools.

All India Radio’s Director of News, Nilesh Kumar Kalbhor (pictured), told delegates that his newsroom has benefitted from the Indian Government’s 2018 Digital India campaign, which lifted the digital skills of school and university students.

“We are well aware of the potential for misuse of AI… we are optimistic but cautious,” he said. In India there is an emphasis on self-regulation of AI.

India’s national radio and TV broadcasters have put guidelines in place for using AI, which include mandatory labelling of AI content and informing people about the potential for misuse of AI.

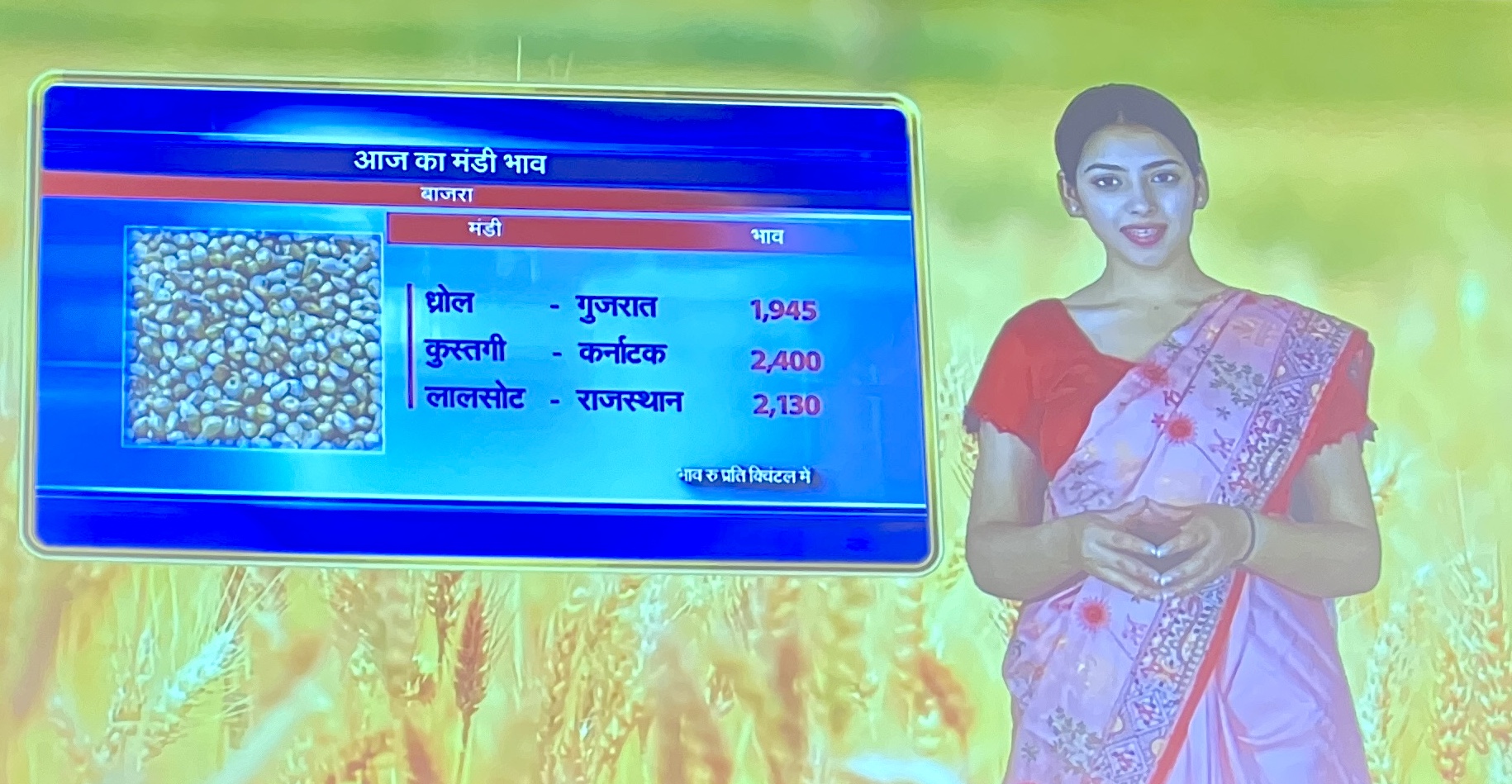

Other uses in the national broadcaster’s newsrooms include automatic language translation, research, and management of databases and library content. AI-generated graphics are being used in schools programs.

“We produce 600 bulletins per day in 90 languages, AI translation is making that more efficient,” explained Nilesh.

Two AI avatars, AI Krish and AI Bhoomi, now present news stories on the broadcaster’s TV news channel.

“Speech to text is used, but we make sure we have human checking at the last stage before publication because, as a public service broadcaster, we know that our audience cares about accuracy and will tell us if we make a mistake.”

The newsrooms offer WhatsApp chat groups for audiences to interact, and they have now added AI chatbots to monitor feedback in these groups and summarise audience reactions to news reports. “Our newsrooms have become very fast by using these platforms,” said Nilesh.

“The Arab Spring changed our traditional skills and practices of journalism, and the construction of news changed drastically during that time,” said TRT World’s News Director Mevlüt Selman Tecim in an ABU-Rai Days session on AI in Newsrooms.

That was the beginning of Turkish Radio and TV’s newsroom journey with AI. The most significant areas where the Turkish national broadcaster is using AI at the moment are transcription, voice and image recognition, language translation, localisation and data analysis.

These functions have increased efficiency, sped up the workflow process and enhanced the production process. There was also a need for the broadcaster to rethink its guidelines. “We had to upgrade our style and ethics guides and reinforce our commitment to explaining how we are using AI,” said Tecim.

He presented examples of how chatbots skew their answers to questions about the Israel-Gaza war, and then compared the discrepancies in how AI answered questions about Israel and about Hamas.

Tecim showed how the Avid Ada chatbot (https://connect.avid.com/ada.html) has been integrated as an interactive help system for newsrooms. He also outlined how AI CoPilot is being used by news producers, who are turning news packages into digital formats or changing horizontal to vertical orientations automatically with AI.

For live feeds, TRT uses AI for Supers and for tracking what is trending on the internet.

Tecim advises using AI “as a tool for creativity, not a replacement,” so that the synergies achieved can lead to innovations and improve organisational structures.

Andrea Gerli from RAI outlined how Italy’s national broadcaster is using AI. They use Microsoft’s CoPilot, integrated with some of the newsroom’s systems:

- As a content assistant to suggest tags and headlines

- To summarise source content from news agencies and other partner sources

- Video analysis – they are testing CoPilot to see if it can reliably identify the main issues in a video report or raw audio/video footage

- Searching RAI’s archival reports to quickly identify content that is worth resurfacing in relation to current news topics

All the AI search engines scrape content without consent, and many don’t acknowledge their sources, so it is difficult to tell whether the answers presented to the searcher are based on credible sources. Of the AI search engines available, Andrea says that Perplexity at least cites its sources, so that searchers can go to them and make their own judgements about the credibility of both the source and the AI-generated answer to the search question.

He also pointed out that all the search engines that are now using generative AI, including Google, Bing and Perplexity, give answers that may decrease web traffic because searchers may feel they no longer need to go to your website if they have a quick answer in a short sentence.

RAI is also experimenting with detection tools for fake content. One of those tools is GPTZero, which can identify whether text was created by an AI bot. However, as the arms race continues, there are also now tools that people can use to ‘humanise’ their AI-created content, such as Undetectable AI.

AI watermarking tools are also being developed, but they are constantly being overtaken by tools to circumvent watermarking and reverse image searches.

The tools are now introducing some safeguards to prevent famous people being faked, but Andrea is not so sure they always work. And what about people who are not famous? “Deepfakes using anyone’s image and profile can easily spread throughout the web,” he said.

In the early days of deepfakes, experts could spot the fakes by looking at the hands moving or listening to the background noise, but AI deepfake generation tools are now being developed to overcome those methods of detection, and they are easily available on the web to help disinformation providers trick the detection bots.

“The only way to spot deep fakes in future will be your own knowledge, you will need to have a hunch it is not true and you can only do that if you follow the news and have real knowledge from trusted sources.

“In the competition for truth not clicks, public service media will have a special role,” he said.

Alexandru Giboi from the European Alliance of News Agencies continued the point, emphasising that it is not just public service media that have a role to play in the AI-generated future; it will be all news agencies that take their role in society seriously.

“All media is at some point public service… Just to produce quality content is not enough, we have to promote it and also support media literacy in our societies, the public will not distinguish it unless we teach them.”

Alexandru said that media which use AI should disclose how they use it and mark content that is AI generated so that their audiences know it comes from a responsible media organisation that is transparent about how it uses AI.

“AI is an opportunity for the media, but we need to get it right now, because it will be difficult to change in the future if we get it wrong… Engage with AI and explore it, but in a way that does not damage the authenticity of the news product or undermine your journalists.”

Other tools for AI detection that were mentioned include:

- Reality Defender https://www.realitydefender.com (a US start-up company) can help media detect synthesised voices and face swapping. “We don’t think there will be a perfect platform for the newsroom to rely on, but this one is useful for now.”

- SG Factchecker is a prototype developed by Mediacorp Singapore using OpenAI’s Custom GPT feature. It delivers “close to human quality fact check success for Singapore news,” according to Mediacorp’s Chua Chin Hon. ChatGPT is providing customised features that can be used by media to create their own tools, but read all the terms and conditions before using them.

- Veri.ai is a tool that lets media use their own rights-owned content in more ways to generate new revenue streams. Luisa Verdoliva, a professor in the Department of Industrial Engineering at the University of Naples, is working with this tool along with a group of research partners to evaluate whether it will be useful for media: https://www.veritone.com/solutions/media-entertainment

- FaceForensics++ is a data set of fake faces against which images can be checked. It is a collaboration between various universities (https://opendatalab.com/OpenDataLab/FaceForensics_plus_plus). Watermarks are no longer useful in identifying fakes: “Deepfakes now even contain fake digital fingerprints, so we need AI to analyse them,” said Luisa.

- Another AI tool for detecting fake images is Noiseprint, which analyses images using heat and noise maps. See https://grip-unina.github.io/noiseprint and https://www.researchgate.net/figure/The-robustness-of-the-noiseprint-algorithm-against-compression-and-resizing-a-Without_fig7_353717842

The research community is putting a lot of effort into building automatic tools that can detect fake media. Luisa Verdoliva said researchers are moving from asking ‘is this video manipulated’ to asking the more sophisticated question, ‘is this the person it is claimed to be.’

Olle Zachrison from Swedish Radio reminded delegates that it is easy to clone any media personality because there are many hours of broadcast content that AI can train on. “It’s easy to clone any presenter within one minute with the audio that is online.”

European broadcasters are working together to fight fakes with AI.

Examples include:

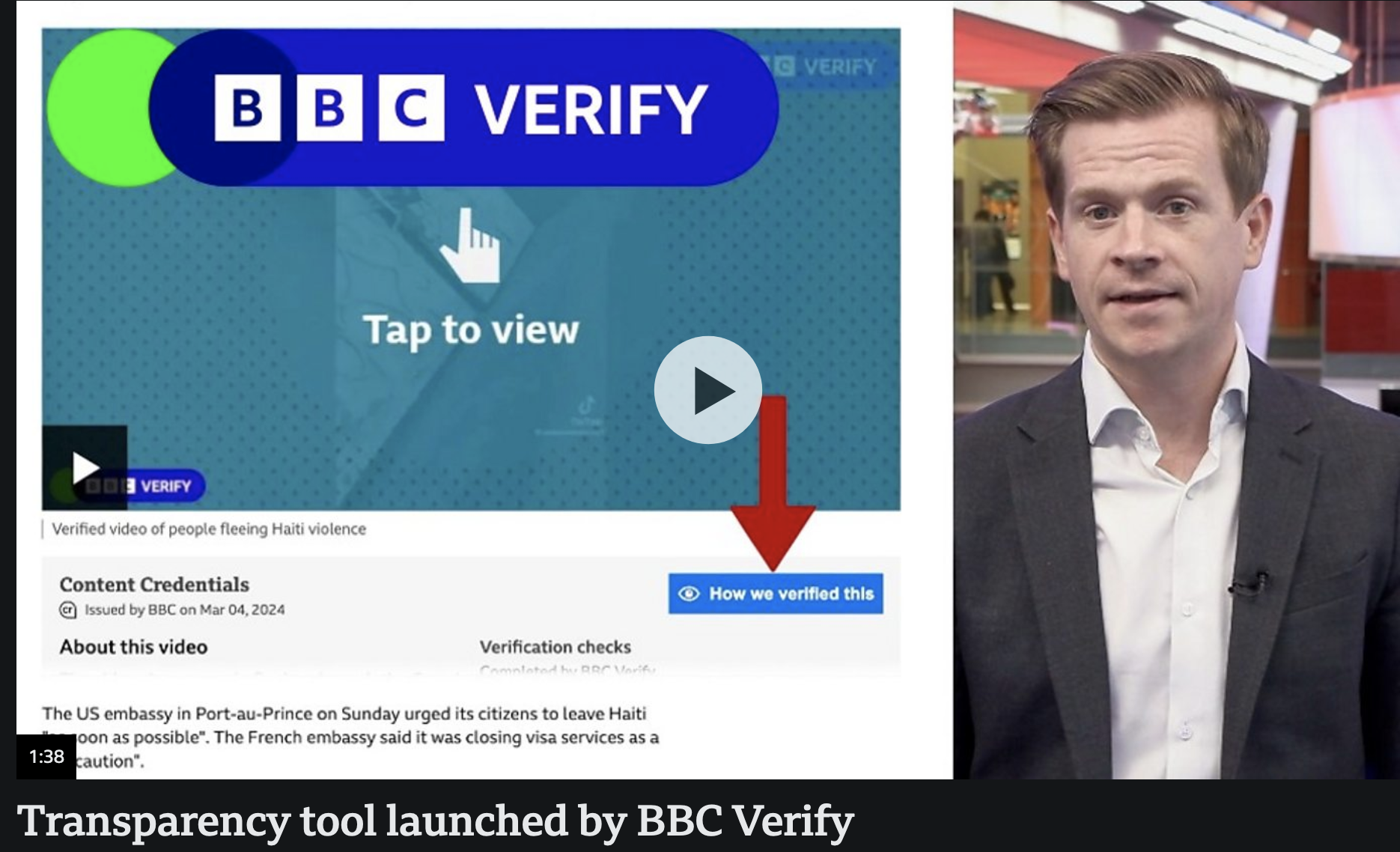

- BBC Verify https://www.bbc.com/news/uk-65650822

- Sweden’s SVT Verifier https://www.svt.se/nyheter/om/svt-nyheter-verifierar

- Spain’s VerificAudio https://reutersinstitute.politics.ox.ac.uk/news/how-spanish-media-group-created-ai-tool-detect-audio-deepfakes-help-journalists-big-election

European newsrooms have joined forces to deliver verified news from trusted broadcasters as part of the European Perspective project, which uses AI to deliver news stories and their original sources to anyone searching through its portal.